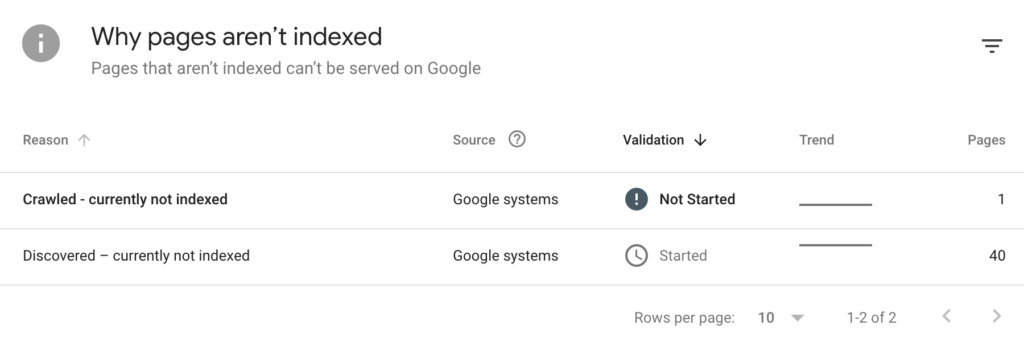

Today I will show you how to fix the “Crawled - currently not indexed” and “Discovered - currently not indexed” issues in Google Search Console.

We’ve all been there. You set up a new website, you publish your content and then time goes by and – crickets. Nothing happens. Google doesn’t seem to pick up and index your new site. This can be super frustrating.

It is very common, though, and through a case study I am going to show you different ways to fix it.

If you have trouble with other Google Search Console issues, have a look at the common Indexing issues in Google Search Console and how to solve them.

How to fix the “Crawled - currently not indexed” and “Discovered - currently not indexed” issues

I set up a new site and, after several weeks, I kept seeing the same two indexing statuses.

First of all, remember that the stages a page goes through to get into Google’s index are:

- Discovered

- Crawled

- Indexed

In Google Search Console, the terms “Discovered,” “Crawled,” and “Indexed” refer to different stages in the process of how Googlebot, Google’s web crawling bot, interacts with your website and its pages. Here’s what each term means:

Discovered:

This stage means that Googlebot has found a page’s URL, for example through a link from within your website, a link from an external site, or your sitemap. A discovered page has not necessarily been crawled yet, and it may or may not end up indexed by Google.

According to Google, the reason why a page is discovered but not indexed is usually that Google wanted to crawl the URL but this was expected to overload the site, so Google rescheduled the crawl. This is why the last crawl date is empty in the report. Google’s further explanation can be found in the Search Console Help article on the Page indexing report.

Crawled:

Once Googlebot discovers a page, it will visit and analyse the page’s content, including text, images, and other elements. Crawling involves fetching the web page’s HTML code and processing it to understand its structure and content. Googlebot follows links on the page to discover additional pages to crawl. When a page is crawled, it means Googlebot has accessed and analysed its content.

Indexed:

When a page is indexed, it means Google has analysed the content fetched during crawling and added the page to its index, which is a massive database of web pages. This is where we want to be, as indexed pages are eligible to appear in Google’s search results. Being indexed does not guarantee that a page will rank highly, but it makes the page eligible to be considered for ranking based on its relevance to search queries.

5 solutions for the “Crawled/Discovered - currently not indexed” issues in GSC:

We are going to go through 5 solutions to this issue:

Solution 1: Request manual indexing

To request a manual indexing of pages in Google Search Console, follow these steps:

Navigate to the URL Inspection tool: In the left-hand menu, click on “URL inspection.” This tool allows you to inspect a specific URL on your website.

Enter the URL: In the URL inspection tool, enter the URL of the page you want to request indexing for. Make sure to enter the full URL, including the “https://” or “http://” prefix.

Perform the inspection: Press Enter to start the inspection. Google Search Console will retrieve the page’s current indexing status from Google’s index.

Review the indexing status: After the inspection, you’ll see the indexing status of the page. It will indicate whether the page is indexed, not indexed, or has any issues preventing indexing.

Request indexing: If the page is not indexed, or you have updated it and want Google to recrawl it, click on the “Request Indexing” button. This adds the URL to a priority crawl queue; it does not guarantee that the page will be indexed.

Confirm the request: Search Console runs a quick live test of the URL and then shows a dialog confirming that indexing was requested. Close it to continue.

Wait for indexing: After you request indexing, Google will process the request, but the exact timing varies. In my experience, it takes a couple of days on average for new websites, although it can range from a few hours to several days depending on various factors.

Monitor indexing status: You can monitor the indexing status of the page by revisiting the URL Inspection tool and checking the latest information.

Solution 2: Add categories in permalinks

How can adding categories to your pages or blog posts, and showing the category name in the URL, help get your site indexed?

Example:

URL without category:

- https://example.com/paella

URL with category:

- https://example.com/recipes/paella

- https://example.com/recipes/tortilla
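If you want to see how URLs like these are put together, here is a minimal Python sketch. The helpers `slugify` and `build_permalink` are hypothetical names for illustration only. In WordPress you would normally get the same result by setting a custom permalink structure such as `/%category%/%postname%/` under Settings > Permalinks, and WordPress applies its own slug rules, which may differ slightly from this approximation.

```python
import re
import unicodedata
from typing import Optional


def slugify(text: str) -> str:
    """Turn a title or category name into a lowercase, hyphen-separated slug."""
    # Decompose accented characters and drop the accents ("Española" -> "Espanola")
    normalized = unicodedata.normalize("NFKD", text)
    ascii_text = normalized.encode("ascii", "ignore").decode("ascii")
    # Replace every run of characters that are not letters or digits with one hyphen
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower())
    return slug.strip("-")


def build_permalink(base_url: str, title: str, category: Optional[str] = None) -> str:
    """Build /category/post-name when a category is given, else /post-name."""
    parts = [base_url.rstrip("/")]
    if category:
        parts.append(slugify(category))
    parts.append(slugify(title))
    return "/".join(parts)


print(build_permalink("https://example.com", "Paella"))                        # https://example.com/paella
print(build_permalink("https://example.com", "Paella", category="Recipes"))    # https://example.com/recipes/paella
print(build_permalink("https://example.com", "Tortilla", category="Recipes"))  # https://example.com/recipes/tortilla
```

Keeping the category as an optional argument mirrors the choice you make for the whole site: either every post URL carries its category, or none does.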

Adding categories to your pages or blog posts and showing the category name in the URL can help improve the indexability of your site in the following ways:

- Targeted crawling and indexing: When search engines encounter URLs with category names, it may help them categorise and prioritise the crawling and indexing process. Search engines may recognise the importance of category pages and allocate more resources to crawl and index them. This can lead to faster and more comprehensive indexing of your site’s content.

- Organised site structure: Categorising your pages or blog posts helps create a logical and organised site structure. It allows search engines to understand the hierarchy and relationships between different content sections on your website. A clear site structure makes it easier for search engines to crawl and index your pages effectively.

- URL readability and user experience: Including category names in the URL can make it more readable and user-friendly. When users see descriptive category names in the URL, they get a better understanding of the content they can expect on that page. This can increase click-through rates from search engine result pages (SERPs) and improve user engagement on your site. User signals, such as click-through rates and time spent on page, may indirectly influence search engine rankings.

- Internal linking and page visibility: Categories often serve as a navigation element on your site, enabling users and search engines to discover related content. By linking your pages or blog posts to their respective categories, you create a network of internal links that search engines can follow. Internal linking helps search engines understand the depth and breadth of your content, ensuring that important pages are not missed during the crawling and indexing process.

However, having multiple categories in WordPress might affect SEO in some cases. You can have a look at the article on that topic to learn more.

Remember, while adding categories and including them in URLs can provide benefits for indexability, it’s essential to maintain a balanced approach. Avoid creating overly complex URL structures with excessive subcategories or keyword stuffing. Focus on creating a logical and user-friendly organisation that enhances both search engine visibility and user experience.

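Solution 3: Check for redirect issues

Excessive redirects, especially chains of several redirects in a row, can waste crawl budget, because Googlebot has to make a separate request for every hop.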

To find redirects in your website and fix indexing issues in Google Search Console (GSC), you can follow these steps:

Navigate to the Pages report: In the left-hand menu, click on “Pages” under the “Indexing” section (older versions of Search Console called this the “Coverage” report). This report provides insights into the indexing status of your website’s pages.

Identify pages indexed but not submitted in your sitemap: In the report, look for any pages marked as “Indexed, not submitted in sitemap.” These pages are indexed by Google but are not included in the sitemap you have submitted. It’s possible that some of them are being redirected to other URLs. Also check the “Page with redirect” reason in the list of pages that are not indexed.

Click on the affected URL: Click on the URL marked as “Indexed, not submitted in sitemap.” This will provide more details about the specific page and the issue.

Review the issue: In the page details, you will see information about the issue affecting the indexing, including any redirects. Look for any notes or messages that mention redirects.

Inspect the URL: Click on the “Inspect URL” button to use the URL Inspection tool. This tool provides detailed information about a specific URL, including any redirects.

Analyse the URL Inspection results: In the URL Inspection tool, you will see the URL’s current indexing status and any issues detected. Look for messages or explanations related to redirects.

Check redirect details: If a redirect is detected, note the URL the page redirects to and the canonical URL Google has selected. The URL Inspection tool does not always show the redirect type (e.g. 301 or 302) or the full chain of redirects, so an external redirect checker can help fill in those details. Make note of this information for further investigation and fixing.

Fix the redirects: To address the redirect issue, you need to identify the source of the redirect and make the necessary changes. This might involve modifying your website’s server configuration, content management system, or plugins. Ensure that the redirects are set up correctly and that they lead to the desired destination URLs.

Request indexing: After fixing the redirects, you can request indexing for the affected URL to ensure that Google recrawls and updates the index accordingly. In the URL Inspection tool, click on the “Request Indexing” button.

Monitor indexing status: Keep an eye on the indexing status in Google Search Console to confirm that the redirect issue has been resolved and that the page is now indexed properly.

You can also use external tools to check redirects on your website, like this one: https://www.redirect-checker.org/index.php
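If you prefer to check redirect chains yourself, here is a rough sketch using only Python’s standard library. It follows a URL one hop at a time and reports the chain, any loop, and whether the chain gets too long. It assumes the server answers `HEAD` requests the same way it answers `GET` requests (not every server does), and `trace_redirects`, `head_request` and the 10-hop default are my own illustrative choices, not a Google tool or rule.

```python
import http.client
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple
from urllib.parse import urljoin, urlsplit

# Status codes treated as redirects
REDIRECT_CODES = {301, 302, 303, 307, 308}

# A fetcher takes a URL and returns (status code, Location header or None)
Fetcher = Callable[[str], Tuple[int, Optional[str]]]


def head_request(url: str) -> Tuple[int, Optional[str]]:
    """Send one HEAD request and return status and Location, without following redirects."""
    parts = urlsplit(url)
    conn_cls = http.client.HTTPSConnection if parts.scheme == "https" else http.client.HTTPConnection
    conn = conn_cls(parts.netloc, timeout=10)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    try:
        conn.request("HEAD", path)
        response = conn.getresponse()
        return response.status, response.getheader("Location")
    finally:
        conn.close()


@dataclass
class RedirectReport:
    hops: List[Tuple[str, int]]  # (url, status) for every request made
    final_url: str
    loop: bool = False
    too_many_hops: bool = False

    @property
    def redirect_count(self) -> int:
        return sum(1 for _, status in self.hops if status in REDIRECT_CODES)


def trace_redirects(url: str, fetch: Fetcher = head_request, max_hops: int = 10) -> RedirectReport:
    """Follow redirects one hop at a time, stopping on a loop or after max_hops redirects."""
    hops: List[Tuple[str, int]] = []
    seen = set()
    current = url
    for _ in range(max_hops + 1):
        if current in seen:
            return RedirectReport(hops, current, loop=True)
        seen.add(current)
        status, location = fetch(current)
        hops.append((current, status))
        if status not in REDIRECT_CODES or not location:
            return RedirectReport(hops, current)
        # Location headers can be relative, so resolve them against the current URL
        current = urljoin(current, location)
    return RedirectReport(hops, current, too_many_hops=True)
```

For example, `trace_redirects("http://example.com/paella")` would tell you whether an old HTTP URL reaches its final destination in one hop or through a chain. Passing the fetch function in as a parameter also makes it easy to test the logic without touching the network.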

Solution 4: Orphan pages

If the only way to discover your new page is from the sitemap and it has no internal links, Google may consider it unimportant. My proposed solution is to add more internal links to make sure all pages are linked from at least one page.

In particular, add links from pages on your site that are already indexed, if there are any.

You can also add a related posts widget at the end of your WordPress pages.

How to find orphan pages in your website to fix indexing issues in GSC?

To find orphan pages in your website and fix indexing issues in Google Search Console (GSC), you can follow these steps:

Navigate to the Pages report: In the left-hand menu, click on “Pages” under the “Indexing” section (older versions of Search Console called this the “Coverage” report). This report provides insights into the indexing status of your website’s pages.

Identify errors or warnings: Look for pages marked with errors or warnings in the Coverage report. These could indicate potential indexing issues, including orphan pages.

Click on the affected URL: Click on the URL marked with an error or warning. This will provide more details about the specific page and the issue.

Review the issue: In the page details, you will see information about the issue affecting the indexing, such as a “Page not found” or “Soft 404” error. This could suggest that the page is not properly linked within your website.

Inspect the URL: Click on the “Inspect URL” button to use the URL Inspection tool. This tool provides detailed information about a specific URL, including its indexability and any crawl errors.

Analyse the URL Inspection results: In the URL Inspection tool, you will see the URL’s current indexability status and any issues detected. Check the discovery details: if Google found the page only through your sitemap and lists no referring page, the page may be an orphan that is not properly linked.

Check internal links: If the page is identified as an orphan, it means it lacks internal links that connect it to other pages on your website. Review your website’s structure and navigation to identify the missing internal links.

Add internal links: To address the orphan page issue, you need to add internal links from relevant pages within your website to the orphaned page. Ensure that these links are properly placed and provide a clear path for search engines and users to discover the orphaned page.

Request indexing: After adding internal links, you can request indexing for the orphaned page to ensure that Google recrawls and includes it in the index. In the URL Inspection tool, click on the “Request Indexing” button.

By following these steps, you can identify orphan pages in your website and address any indexing issues related to them. Regularly monitoring the Coverage report and adding internal links to orphaned pages will help ensure that all your website’s pages are discoverable and properly indexed by Google.
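Search Console will not list orphan pages for you directly, so one rough way to find them is to compare the URLs in your sitemap with the internal links on your pages. The sketch below assumes you already have your sitemap XML and the HTML of each page (for example, saved from a crawl of your site). `find_orphans` and its helpers are hypothetical names, and the sketch only handles a plain `urlset` sitemap, not a sitemap index.

```python
import xml.etree.ElementTree as ET
from html.parser import HTMLParser
from typing import Dict, List, Set
from urllib.parse import urldefrag, urljoin, urlsplit

# Namespace used by standard XML sitemaps
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> Set[str]:
    """Return every <loc> URL listed in a plain <urlset> sitemap."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text}


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def internal_links(page_url: str, html: str) -> Set[str]:
    """Absolute links (without #fragments) from a page to the same host."""
    collector = LinkCollector()
    collector.feed(html)
    host = urlsplit(page_url).netloc
    links = set()
    for href in collector.hrefs:
        absolute, _fragment = urldefrag(urljoin(page_url, href))
        if urlsplit(absolute).netloc == host:
            links.add(absolute)
    return links


def find_orphans(sitemap_xml: str, pages: Dict[str, str]) -> Set[str]:
    """Sitemap URLs that none of the other crawled pages link to."""
    linked: Set[str] = set()
    for url, html in pages.items():
        # A page linking to itself does not help Google discover it
        linked |= internal_links(url, html) - {url}
    return sitemap_urls(sitemap_xml) - linked
```

URLs are compared exactly as written, so normalise trailing slashes and `http`/`https` variants consistently first, or the same page may show up as an orphan.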

Solution 5: Low-quality content

AI-generated content and AI writing tools are gaining popularity, but they rarely create useful content without human involvement.

How can low-quality content impact indexing in GSC?

Low-quality content can have a negative impact on indexing in Google Search Console (GSC). Here’s how:

Limited or no indexing: Google aims to provide users with high-quality and relevant content in its search results. If your website contains low-quality content, Google may choose not to index those pages or may reduce their visibility in search results.

Indexing and ranking penalties: Low-quality content can trigger penalties from search engines, resulting in decreased visibility or removal from search results altogether. Google’s algorithms assess the quality of content based on factors such as relevance, uniqueness, accuracy, and user experience.

User experience signals: Google takes user experience into account when determining indexing and ranking. Low-quality content often provides little value to users, leading to a negative user experience. Search engines may pick up on signals such as high bounce rates, low time on page, or frequent user dissatisfaction with your content, and these signals may affect the indexing and ranking of your pages, reducing their visibility in search results.

Duplicate or thin content issues: Low-quality content can manifest as duplicate content or thin content. Duplicate content refers to identical or substantially similar content appearing on multiple pages or across different websites. Thin content refers to pages with minimal or insufficient content that fails to provide substantial value. Google may choose to index only one version of duplicate content or may disregard pages with thin content altogether.

Negative impact on crawl budget: Google assigns a crawl budget to each website, which represents the maximum number of pages Googlebot will crawl during a given timeframe. If your website contains a significant amount of low-quality content, Googlebot may spend more time crawling and indexing these pages, leaving fewer resources to crawl and index your high-quality content.
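Google does not publish a word-count threshold or a duplicate-detection formula, but you can run a rough self-audit for the duplicate and thin content issues described above. This sketch flags pages under a minimum word count as possibly thin and compares every pair of pages using word 3-grams (“shingles”) and Jaccard similarity, a common near-duplicate heuristic. The 300-word and 0.8 thresholds and the `audit_content` helper are assumptions for illustration only, not values Google uses.

```python
import re
from itertools import combinations
from typing import Dict, List, Set, Tuple


def words(text: str) -> List[str]:
    """Lowercase word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def shingles(text: str, size: int = 3) -> Set[Tuple[str, ...]]:
    """Overlapping runs of `size` consecutive words ("shingles")."""
    tokens = words(text)
    return {tuple(tokens[i:i + size]) for i in range(len(tokens) - size + 1)}


def jaccard(a: Set, b: Set) -> float:
    """Share of shingles two pages have in common (0.0 = none, 1.0 = same shingles)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def audit_content(
    pages: Dict[str, str],
    min_words: int = 300,        # assumption: arbitrary cut-off, not a Google rule
    dup_threshold: float = 0.8,  # assumption: arbitrary similarity cut-off
) -> Tuple[List[str], List[Tuple[str, str, float]]]:
    """Return (possibly thin pages, pairs of possibly near-duplicate pages)."""
    thin = sorted(url for url, text in pages.items() if len(words(text)) < min_words)
    signatures = {url: shingles(text) for url, text in pages.items()}
    near_duplicates = []
    for a, b in combinations(sorted(pages), 2):
        score = jaccard(signatures[a], signatures[b])
        if score >= dup_threshold:
            near_duplicates.append((a, b, round(score, 2)))
    return thin, near_duplicates
```

Treat anything it flags as a prompt to review the page by hand, not as a verdict; a short page can still be genuinely useful.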

To maintain a positive impact on indexing in GSC, it is crucial to focus on creating high-quality, valuable, and unique content that meets the needs of your target audience. By providing relevant and engaging content, you can improve your chances of getting your pages properly indexed and ranked in Google’s search results.

I am not saying that you should not use AI for your content. It can be useful to help you automate the most repetitive tasks, but you should always keep your audience in mind and provide them with content that is actually useful.

Learn here how AI can boost your marketing efforts and save you time without compromising on quality and running into these issues.

This blog is an example of this. Instead of regurgitating content from other web development or SEO sources, I provide real-life examples of things that work (or don’t work) on my sites. I am offering a real, human perspective that I think will be valuable to my readers.

By following the 5 solutions above, you can speed up the indexing of specific URLs on your website via Google Search Console.

If you are planning to write some content for your site, you might find these popular resources useful:

Free Content Marketing Daily planner

How to create a content marketing strategy

How to do SEO and Content for B2B vs B2C Selling Proposition

What content marketing you should do according to your business model

What is programmatic SEO and how to use it to boost your marketing efforts

Differences between SEO for transactional (eCommerce or services) vs informational sites

Stay purrfectly tuned for our next meow-velous marketing adventure, my fabulous feline friends!

Missing me already, dear human? You can find me on X and Facebook.

Good luck!

Moxie